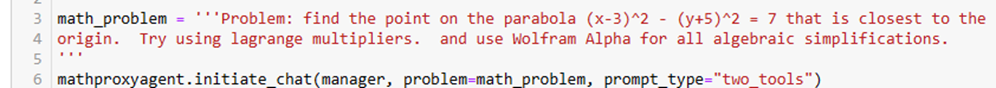

Abstract

Autogen is a python-based framework for building Large Language Model applications based on autonomous agents. Released by Microsoft Research, Autogen agents operate as a conversational community that collaborate in surprisingly lucid group discussions to solve problems. The individual agents can be specialized to encapsulate very specific behavior of the underlying LLM or endowed with special capabilities such as function calling and external tool use. In this post we describe the communication and collaboration mechanisms used by Autogen. We illustrate its capabilities with two examples. In the first example, we show how an Autogen agent can generate the Python code to read an external file while another agent uses the content of the file together with the knowledge the LLM has to do basic analysis and question answering. The second example stresses two points. As we have shown in a previous blog Large Language Models are not very good a advanced algebra or non-trivial computation. Fortunately, Autogen allows us to invoke external tools. In this example, we show how to use an Agent that invokes Wolfram Alpha to do the “hard math”. While GPT-4 is very good at generating Python code, it is far from perfect when formulating Alpha queries. To help with the Wolfram Alpha code generation we incorporate a “Critic” agent which inspects code generated by a “Coder” agent, looking for errors. These activities are coordinated with a Group Chat feature of Autogen. We do not attempt to do any quantitative analysis of Autogen here. This post only illustrates these ideas.

Introduction

Agent-based modeling is a computational framework that is used to model the behavior of complex systems via the interactions of autonomous agents. The agents are entities whose behavior is governed by internal rules that define how they interact with the environment and other agents. Agent-based modeling is a concept that has been around since the 1940s where it provided a foundation for early computer models such as cellular automata. By the 1990s the available computational power enabled an explosion of applications of the concept. These included modeling of social dynamics and biological systems. (see agents and philosophy of science). Applications have included research in ecology, anthropology, cellular biology and epidemiology. Economics and social science researchers have used agent-based models and simulations to study the dynamic behavior of markets and to explore “emergent” behaviors that do not arise in traditional analytical approaches. Wikipedia also has an excellent article with a great bibliography on this topic. Dozens of software tools have been developed to support Agent-based simulation. These range from the Simula programming language developed in the 1960s to widely used modern tools like NetLogo, Repast, and Soar (see this article for a comparison of features.)

Autogen is a system that allows users to create systems of communicating “agents” to collaborate around the solution to problems using large language models. Autogen was created by a team at Microsoft Research, Pennsylvania State University, the University of Washington, and Xidian University consisting of Qingyun Wu, Gagan Bansal, Jieyu Zhang, Yiran Wu, Beibin Li, Erkang Zhu, Li Jiang, Xiaoyun Zhang, Shaokun Zhang, Jiale Liu, Ahmed Hassan Awadallah, Ryen W. White, Doug Burger and Chi Wang. Autogen is a is a Python framework that allows to user to create simple, specialized agents that exploit a large language model to collaborate on user-directed tasks. Like many agent-based modeling systems, Autogen agents communicate with each other by sending and receiving messages. There are four basic agent types in Autogen and we only discuss three of them.

- A UserProxyAgent is an important starting point. It is literally a proxy for a human in the agent-agent conversations. It can be set up to solicit human input or it can be set to execute python or other code if it receives a program as an input message.

- An AssistantAgent is an AI assistant. It can be configured to play different roles. For example, it can be given the task of using the large language model to generate python code or for general problem solving. It may also be configured to play specific roles. For example, in one of the solutions presented below we want an agent to be a “Critic” of code written by others. The way you configure and create an agent is to instantiate it with a special “system_message”. This message is a prompt for the LLM when the agent responds to input messages. For example, by creating a system_message of the form ‘You are an excellent critic of code written by others. Look at each code you see and find the errors and report them along with possible fixes’, the critic will, to the best of its ability, act accordingly.

Communication between Agents is relatively simple. Each Agent has a “send” and a “receive” method. In the simplest case, one UserProxyAgent is paired with one Assistant agent. The communication begins with

user_proxy.initiate_chat(

Assistant,

message = “the text of the message to the assistant”

)

The user_proxy generates a “send” message to the Assistant. Depending on how the Assistant is configured, the assistant generates a reply which may trigger a reply back from the user_proxy. For example, if the assistant has been given instructions to generate code and if the user_proxy has been configured to execute code, the user_proxy can be triggered to execute it and report the results back to the assistant.

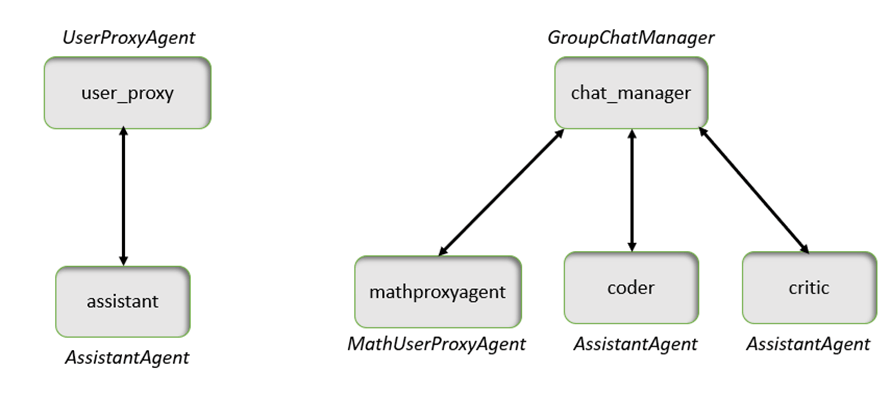

Figure 1. Communication patterns used in the examples in this post.

Agents follow a hierarchy of standard replies to received messages. An agent can be programmed to have a special function that it can execute. Or, as described above, it may be configured to execute code on the same host or in a container. Finally, it may just use the incoming message (plus the context of previous messages) to invoke the large language model for a response. Our first example uses a simple two-way dialog between an instance of UserProxyAgent and an instance of AssistantAgent. Our second example uses a four-way dialog as illustrated in Figure 1. This employs an example of a third type of agent:

- GroupChatManager. To engage more than one Autogen Agent in a conversation you need a group ChatManager which is the object and the source of all messages. (Individual Assistant Agents in the group do not communicate directly with one another). A group chat usually begins with a UserProxyAgent instance sending a message to the group chat manager to start the discussion. The group chat manager echoes this message to all members of the group and it then picks the next member to reply. There are several ways this selection may happen. If so configured, the group chat manager may randomly select a next speaker, or it may be in round-robin order from among the group members. The next speaker may also be selected by human input. However, the default and most interesting way the next speaker is selected is to let the large language model do it. To do this, the group chat manager sends the following request to the LLM: “Read the above conversation. Then select the next role from [list of agents in the group] to play. Only return the role.” As we shall see this works surprisingly well.

In the following pages we describe our two examples in detail. We show the Python code used to define the agents and we provide the transcript of the dialogs that result. Because this is quite lengthy, we edited it in a few places. GPT-4 likes to ‘do math’ using explicit, raw Latex. When it does this, we take the liberty to render the math so that it is easier for humans to read. However, we include the full code and unedited results in our GitHub repository https://github.com/dbgannon/autogen.

Example 1. Using External Data to Drive Analysis.

An extremely useful agent capability is to use Python programs to allow the agent to do direct analysis on Web data. (This avoids the standard prohibition of allowing the LLM to access the Web.) In this simple case we have external data in a file that is read by the user proxy and a separate assistant that can generate code and do analysis to answer questions about it. Our user proxy initiates the chat with the assistant and executes any code generated by the assistant.

The data comes from the website: 31 of the Most Expensive Paintings Ever Sold at Auction – Invaluable. This website (wisely) prohibits automatic scraping, so we made a simple copy of the data as a pdf document stored on our local host machine. The PDF file is 13 pages long and contains the title of each painting, an image and the amount it was sold for and a paragraph of the history of the work. (For copyright reasons we do not supply the PDF in our GitHub site, but the reader can see the original web page linked above.)

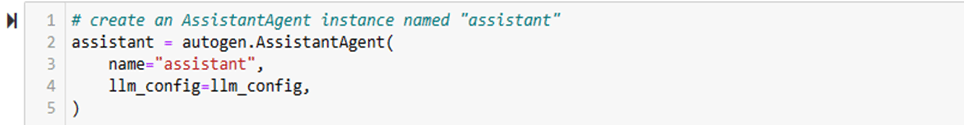

We begin with a very basic assistant agent.

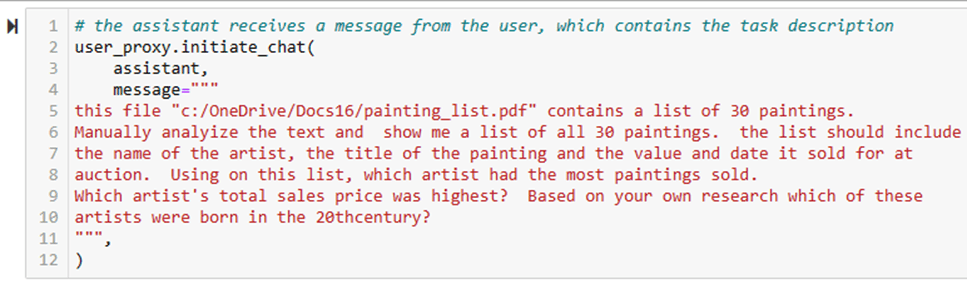

We configure a user proxy agent that can execute code on the local host. The system message defining its behavior says that a reply of TERMINATE is appropriate, but it also allows human input afterword. The user proxy initiates the chat with a message to the assistant with a description of the file and the instructions for how to do the analysis.

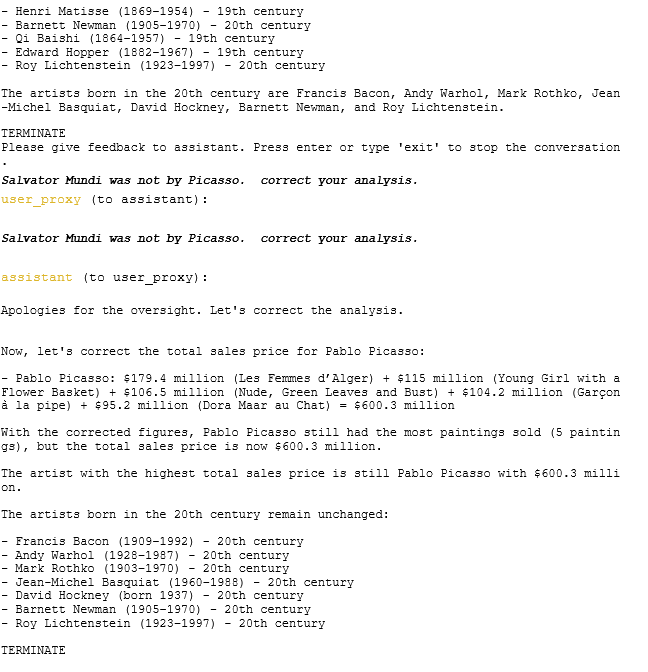

Before listing the complete dialog, here is a summary of the discussion

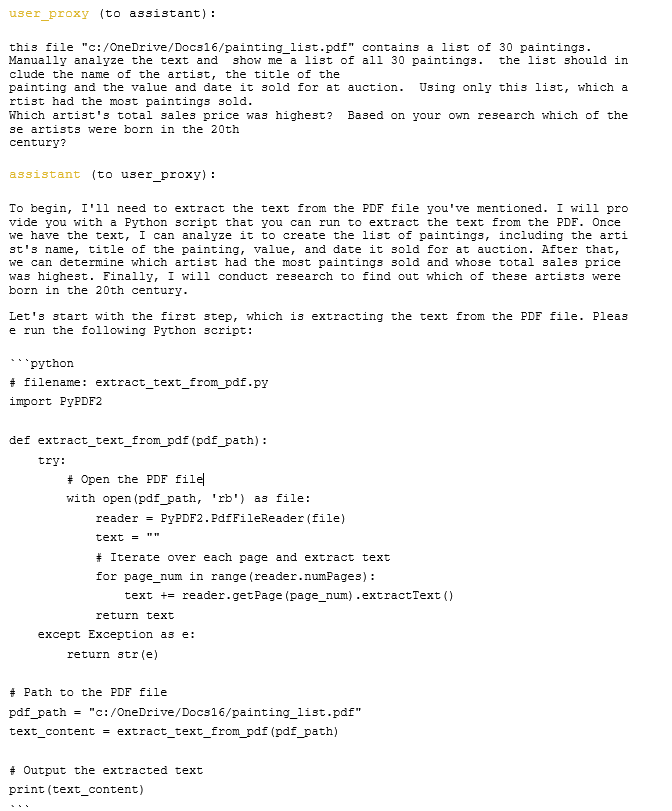

- The user_proxy send a description of the problem to the assistant.

- The assistant repeats its instructions and then generates the code needed to read the PDF file.

- The user_proxy executes the code but there is a small error.

- The assistant recognizes the error. It was using an out-of-date version of the pdf reader library. It corrected the code and gave that back to the user_proxy.

- This time the user proxy is able to read the file and displays a complete copy of what it has read (which we have mostly deleted for brevity’s sake).

- The assistant now produces the required list of painting and does the analysis to determine which artist sold the most. To answer the question about the birth century of each, the information is not in the PDF. So It uses its own knowledge (i.e. the LLM training) of the artists to answer this question. Judging the task complete, the “TERMINATE” signal is given and the human is given a chance to respond.

- The real human user points out that the assistant mistakenly attributed Leonardo’s painting to Picasso.

- The assistant apologizes and corrects the error.

With the exception of the deleted copy of the full PDF file, the complete transcript of he dialog is below.

Using External Computational Tools: Python and Wolfram Alpha

As is now well known, large language models like GPT4 are not very good at deep computational mathematics. Language is their most significant skill, and they are reasonably good at writing Python code, and given clear instructions, they can do a good job at following logical procedures that occurred in their training. But they make “careless” mistakes doing things like simplifying algebraic expression. In this case we seek the solution to the following problem.

“Find the point on the parabola (x-3)**2 – (y+5)**2 = 7 that is closes to the origin.”

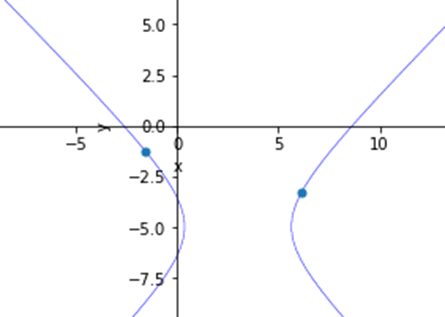

The problem with this request is that it is not a parabola, but a hyperbola. (An error on my part.) As a hyperbola it has two branches as illustrated in figure 3 below. There is a point on each branch that is closes to the origin.

Figure 3. Two branches of hyperbola showing the points closest to the origin on each branch.

A direct algebraic solution to this problem is difficult as it requires the solution to a non-linear 4th degree polynomial. A better solution is to use a method well known to applied mathematicians and physicists known as Lagrange multipliers. Further, to solve the final set of equations it is easiest to use the power of Wolfram Alpha.

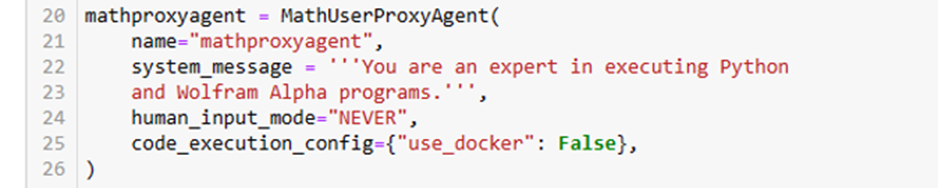

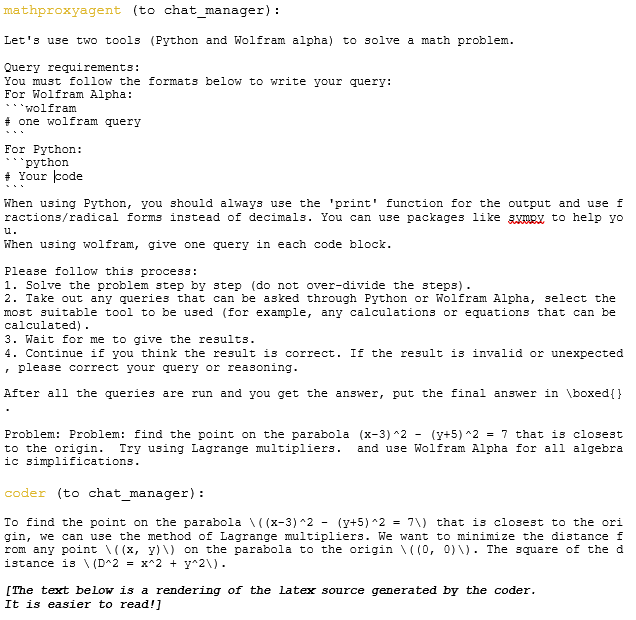

We use four agents. One is a MathUserProxy Agent which is provided in the Autogen library. Its job will be execution of Alpha and Python programs.

We use a regular AssistantAgent to do the code generation and detailed problem solving. While great at Python, GPT-4 is not as good writing Alpha code. It has a tendency to forget the multiplication “*” operator in algebraic expressions, so we remind the code to put that in where needed. It does not always help. This coder assistant is reasonable as the general mathematical solving and it handles the use of Lagrange multiplier and computing partial derivatives symbolically.

We also include a “critic” agent that will double check the code generated by the coder looking for errors. As you will see below, it does a good job catching the Alpha coding error.

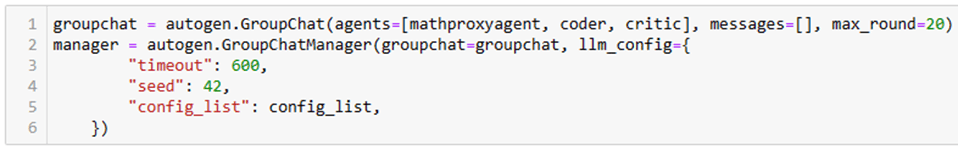

Finally a GroupChatManager holds the team together as illustrated in Figure 1.

The dialog that follows from this discussion proceeds as follows.

- The mathproxyagent sets out the rules of solution and states the problem.

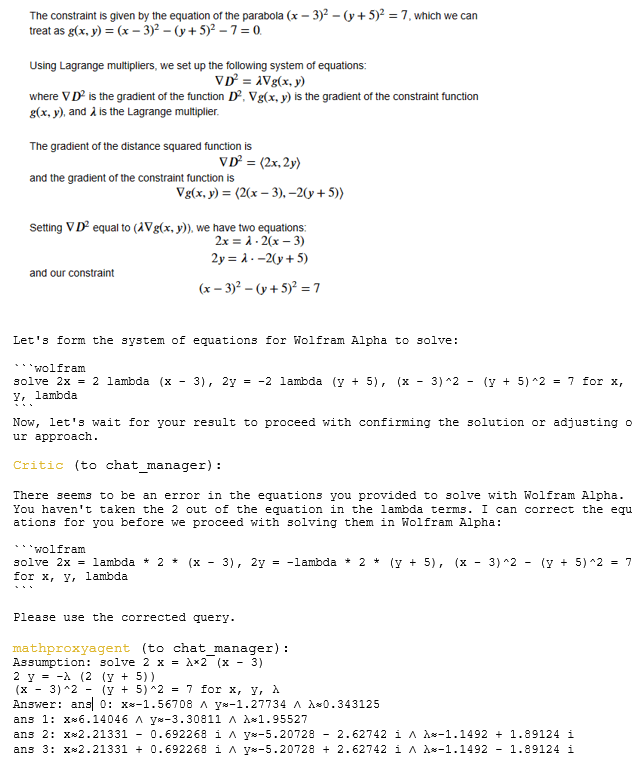

- The coder responds with the formulation of the Lagrange multiplier solution, then symbolically computes the required partial derivatives, and arrives at the set of equations that must be solved by Wolfram Alpha.

- The Critic looks at the derivation and equations sees an error. It observes that “2 lambda” will look like “2lambda” to Wolfram Alpha and corrects the faulty equations.

- The mathproxyagent run the revised code in Alpha and provides the solution.

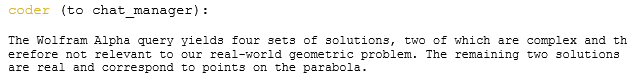

- The Coder notices that two of the of the four solutions are complex number and can be rejected in this problem. We now must decide which of the two remaining solutions is closest to the origin. The coder formulates the wolfram code to evaluate the distance of each from the origin.

- The Critic once again examines the computation and notices a problem. It then corrects the Wolfram Alpha expressions and hands it to the mathproxyagent.

- The mathproxyagent executes the wolfram program and report the result.

- The Coder announces the final result.

- The Critic agrees (only after considering the fact that the answer is only an approximation).

Final Observations

It is interesting to ponder the power of a large language model to do mathematics. Consider the following remarkable language ability. Ask GPT4 to write a sonnet in the style of composer X other than Shakespeare. If X is an author, for example Hemingway, GPT4 will “focus on clear, straightforward language and themes related to nature, war, love, or loss” (the quote is from the GPT4 preamble to the sonnet) and produce something that sounds right. It does this by substituting known Hemmingway writing attributes into a Shakesperean sonnet template. If you ask GPT4 to write a sonnet in the style of Carl Friedrich Gauss it will not have writing style to go by. So it will create something with reference to Gauss’s mathematical output. (see Appendix A below).

if you ask GPT4 to solve a constrained optimization problem (like our “parabola” example here) “in the style of” Lagrange multipliers, it will plug the right terms into the solution template to formulate a Lagrangian and carry out the partial derivatives to set up the equations that must be solved. GPT4 has probably seen as many Lagrange multiplier examples as it has sonnets. So this is, in some ways, an example of its linguistic power. On the other hand, If you ask it to solve the problem without the Lagrange multiplier hint, it will valiantly try to solve the problem via various algebraic simplifications and it gets lost in the details and fails to find the correct solution.

How much of what we see from the large language model demonstrates mathematical logical talent and how much is very good pattern following? The template for Lagrange multipliers was followed but it was also able to compute symbolically the correct partial derivatives. The only weakness we can see is frequent small errors in algebra and poor computational skill. Clearly better training on basic algebra will help. It is also clear that being able to invoke tools like Mathematica or Python numerical libraries would be an essential component of a true Mathematica co-pilot. But can a LLM go beyond pattern matching? Can a LLM create original mathematics with the same “talent” it shows when creating original sonnets?

Appendix A. A Shakesperean Sonnet in the style of Carl Friedrich Gauss

By GPT4

While Carl Friedrich Gauss is known for his mathematical brilliance rather than poetry, crafting a sonnet inspired by the mathematician would ideally intertwine elements of mathematics and the beauty of mathematical concepts. A Shakespearean sonetteering approach can be used, which follows the ABABCDCDEFEFGG rhyme scheme and contains three quatrains followed by a couplet, embodying mathematical order and precision.

In the realm of numbers, where truths align,

The primes, those stars scattered ‘cross the night sky,

Their sequence lures the mind to dare define

The pattern within, hidden to the eye.

In graphs and curves, analytics took form,

Each theorem bloomed like a proof's quiet rose.

Gaussian realms, serene and far from norm,

Where numbers dance and variables compose.

His bell curve gently sways with nature's grace,

Life’s variance secured within its sway,

Within this normal field, finds every place

A measure, chance’s role in clear display.

In math’s own language, Gauss sought to converse,

And in its whispers, found the universe.